将图片变成patch

使用einops能够很简单地实现图片变成patch的功能。

这里为了简单地可视化,将每个patch pad一定的值以示区分。

import einops

import numpy as np

import mmcv

import cv2

from PIL import Image

img = mmcv.imread(r'C:\Users\czh\Desktop\test.png', channel_order='rgb')

h, w, c = img.shape

p = 64 # patch_size

pad = 2

img2 = cv2.resize(img, (w // p * p, h // p * p))

img_patches = einops.rearrange(img2, '(h h1) (w w1) c -> (h w) c h1 w1', h1=p, w1=p)

img_patches = torch.tensor(img_patches)

padded_pathes = F.pad(img_patches, [pad]*4, mode='constant', value=255)

img_padded = einops.rearrange(padded_pathes, '(h w) c h1 w1 -> (h h1) (w w1) c', h1=p+pad*2, w1=p+pad*2, h=img2.shape[0]//p)

Image.fromarray(img_padded.numpy())

原始图片:

变成patch之后的图片(可视化):

在ViT中,是直接使用

nn.Conv2d进行卷积操作,上面只是一个可视化。

其他的实现方法

当然也可以利用F.unfold和F.fold实现,只是这样就会很麻烦,需要很多次reshape和permute以保证正确的维度。

具体地,比如将feature map变成token也是相同的做法,可以参考MobileViT v2的代码。

img_tensor = torch.from_numpy(img2)[None, ...].permute(0, -1, 1, 2).float() # [B, C, H, W]

B, C, H, W = img_tensor.shape # 这里因为只有一张图片,所以B = 1

# unfold成patch,只不过将C和patch_size合成了一个维度

img_patches = F.unfold(img_tensor, kernel_size=(p, p), stride=(p, p)) # [B, p*p*C, H/p*W/p] or [B, C*patch_size, num_patch]

num_patch = img_patches.shape[-1]

img_patches = img_patches.reshape(B, C, p, p, num_patch).permute(0, -1, 1, 2, 3).reshape(B*num_patch, C, p, p)

# padding

padded_pathes = F.pad(img_patches, [pad]*4, mode='constant', value=255) # [B*num_patch, C, p+2*pad, p+2*pad]

pad_h = pad_w = p + 2 * pad

# 保证维度正确

padded_pathes = padded_pathes.reshape(B, num_patch, C, pad_h, pad_w).permute(0, 2, -2, -1, 1).reshape(B, C*pad_h*pad_w, num_patch)

img_padded_h = H // p * (pad * 2 + p)

img_padded_w = W // p * (pad * 2 + p)

# 再fold成图像的形状

# 需要注意的是,fold的时候需要计算一下输出的大小output_size

img_padded = F.fold(padded_pathes, output_size=(img_padded_h, img_padded_w), kernel_size=(pad_h, pad_w), stride=(pad_h, pad_w))[0] # get 1 img

img_padded = img_padded.permute(1, 2, 0).to(torch.uint8)

Image.fromarray(img_padded.numpy())

还有利用F.conv2d和F.pixel_shuffle做的

weights = torch.eye(p * p, dtype=torch.float)

weights = weights.reshape(p * p, 1, p, p).repeat(C, 1, 1, 1) # [p*p*C, 1, p, p]

# groups必须被in_channel和out_channel同时整除

# 那么在做conv时,kernel[1, p, p]分别就是[1, 0, 0, ..., 0] [0, 1, 0, ..., 0]

# |0, 0, 0, ..., 0| |0, 0, 0, ..., 0|

# |. . . ... .| |. . . ... .| ...

# [0, 0, 0, ..., 0] [0, 0, 0, ..., 0]

# 也就是说做完卷积之后得到的张量的每一维都是对应kernel的1所在的位置乘以(等于)对应位置

# img的像素值,这样再做pixel_shuffle就能返回到原图

# 实际上就等价于img_patches = F.pixel_unshuffle(img_tensor, downscale_factor=p)

img_patches = F.conv2d(img_tensor, weights, bias=None, stride=(p, p), groups=C) # [B, p*p*C, H//p, W//p]

num_patch = H // p * W // p

img_patches = img_patches.reshape(B, C, p**2, H//p, W//p).permute(0, -2, -1, 1, 2).reshape(B*num_patch, C, p, p)

padded_pathes = F.pad(img_patches, [pad]*4, mode='constant', value=255) # [B*num_patch, C, p+2*pad, p+2*pad]

pad_h = pad_w = p + 2 * pad

padded_pathes = padded_pathes.reshape(B, num_patch, C, pad_h, pad_w).permute(0, 2, -2, -1, 1).reshape(B, C*pad_h*pad_w, H//p, W//p)

img_padded = F.pixel_shuffle(padded_pathes, upscale_factor=pad_h)[0].permute(-2, -1, 0).to(torch.uint8)

Image.fromarray(img_padded.numpy())

也能达到相同的效果。

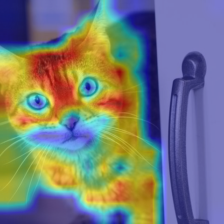

使用GradCAM可视化注意力图

这里使用pytorch_grad_cam包进行可视化操作。

首先导入模型

import timm

vit = timm.models.create_model('vit_tiny_patch16_224', pretrained=True)

原图如下:

再导入图片,定义转换操作

import numpy as np

import torch

from PIL import Image

import torchvision.transforms as T

from pytorch_grad_cam import GradCAM

from pytorch_grad_cam.utils.image import show_cam_on_image

from pytorch_grad_cam.utils.model_targets import ClassifierOutputTarget

import torch.nn.functional as F

import cv2

import random

def seed_every_thing(seed):

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

np.random.seed(seed)

random.seed(seed)

img = Image.open('C:/Users/czh/Desktop/cat.jpg')

transf_geo = T.Compose([

T.RandomCrop(480, pad_if_needed=True),

T.Resize(224)

])

transf_norm = T.Compose([

T.ToTensor(),

T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

直接扔进网络进行预测

vit.eval()

seed = 298

torch.manual_seed(seed)

img_tensor = transf_norm(transf_geo(img))[None, ...]

vit(img_tensor).argmax()

这里先将crop之后的图片变成PIL Image

seed_every_thing(seed)

img_tensor = transf_norm(transf_geo(img))[None, ...]

normed_cropped_img = np.array(img_tensor[0].permute(1, 2, 0))

mean = np.array([0.485, 0.456, 0.406]).reshape(1, -1)

std = np.array([0.229, 0.224, 0.225]).reshape(1, -1)

uint8_img = ((normed_cropped_img * std + mean) * 255).astype('uint8')

Image.fromarray(uint8_img)

也可以使用F.grid_sample得到crop之后的图。

在使用F.grid_sample时,需要注意传入的h和w的顺序。具体可以看这个博客。

h, w = img.size

new_h = torch.linspace(-1, 1, h)

new_w = torch.linspace(-1, 1, w)

# 需要注意下面两句,输入的h和w是反的

x_grids, y_grids = torch.meshgrid(new_w, new_h)

grids2 = torch.cat([y_grids.unsqueeze(2), x_grids.unsqueeze(2)], dim=2)

raw_grid = grids2.permute(-1, 0, 1) # [2, h', w']

seed_every_thing(seed)

transf_grids = transf_geo(raw_grid) # [2, h', w']

img2 = torch.tensor(np.array(img)).unsqueeze(0).permute(0, 3, 1, 2).float() # [bs, 3, h, w]

cropped_img = F.grid_sample(img2, transf_grids[None, ...].permute(0, 2, 3, 1), align_corners=True).to(torch.uint8)[0].permute(1, 2, 0)

Image.fromarray(np.array(cropped_img))

在定义cam之前需要给出怎么变换得到的注意力图,因为注意力图的shape是[bs, num_token, dim],对于vit_tiny来说,输出的num_token为(224/16)^2=196,dim为192。

再构造grad cam之前,需要将shape转化为CNN特征图的形状:

def reshape_transform(tensor, height=14, width=14):

# 这里的1是为了去掉[CLS] token

# 这里的h和w是输出token组成的特征图的高和宽

result = tensor[:, 1 : , :].reshape(tensor.size(0),

height, width, tensor.size(2))

# Bring the channels to the first dimension,

# like in CNNs.

result = result.transpose(2, 3).transpose(1, 2)

return result

再构造grad cam和可视化

grad_cam = GradCAM(model=vit, target_layers=[vit.blocks[-1].norm1], reshape_transform=reshape_transform)

target = [ClassifierOutputTarget(281)] # 表示猫

cam = grad_cam(img_tensor, target)

cam = cam[0, :]

visualization = show_cam_on_image(uint8_img / 255, cam, use_rgb=True, colormap=cv2.COLORMAP_JET, image_weight=0.5)

Image.fromarray(visualization)